OVERTURE

To cache, or not to cache, that is the question:

Whether ’tis user in the mind to suffer

The slings and ah-rows of outrageous waiting,

Or to take arms against a sea of rows

And by opposing cache them. To die—to sleep; or not.

OVERVIEW

I’ve been fascinated by caches since the mid-2000s, when I was working on a big middleware project that suffered from an unbearable UX caused by a very slow ODBC driver. This was, of course, before the advent of cloud applications. The solution we devised was to introduce a cache layer in the middleware, sitting between the client application and the DB server. We went from multi-second response times to tens of milliseconds, roughly a hundredfold improvement. The cache we used (Ehcache) and the middleware built on it are still in use today.

When I talk about caching, I am not referring to the normal use of PXCache in graphs, but rather to the so-called slot caching mechanism found in the PX.Data.PXDatabase class. If you talk to developers, many will say “don’t ever do that” or “it is not recommended.” I disagree with these assertions, especially since Acumatica’s out-of-the-box code uses plenty of these caching strategies. This is even more common where the PXSelectorAttribute is used.

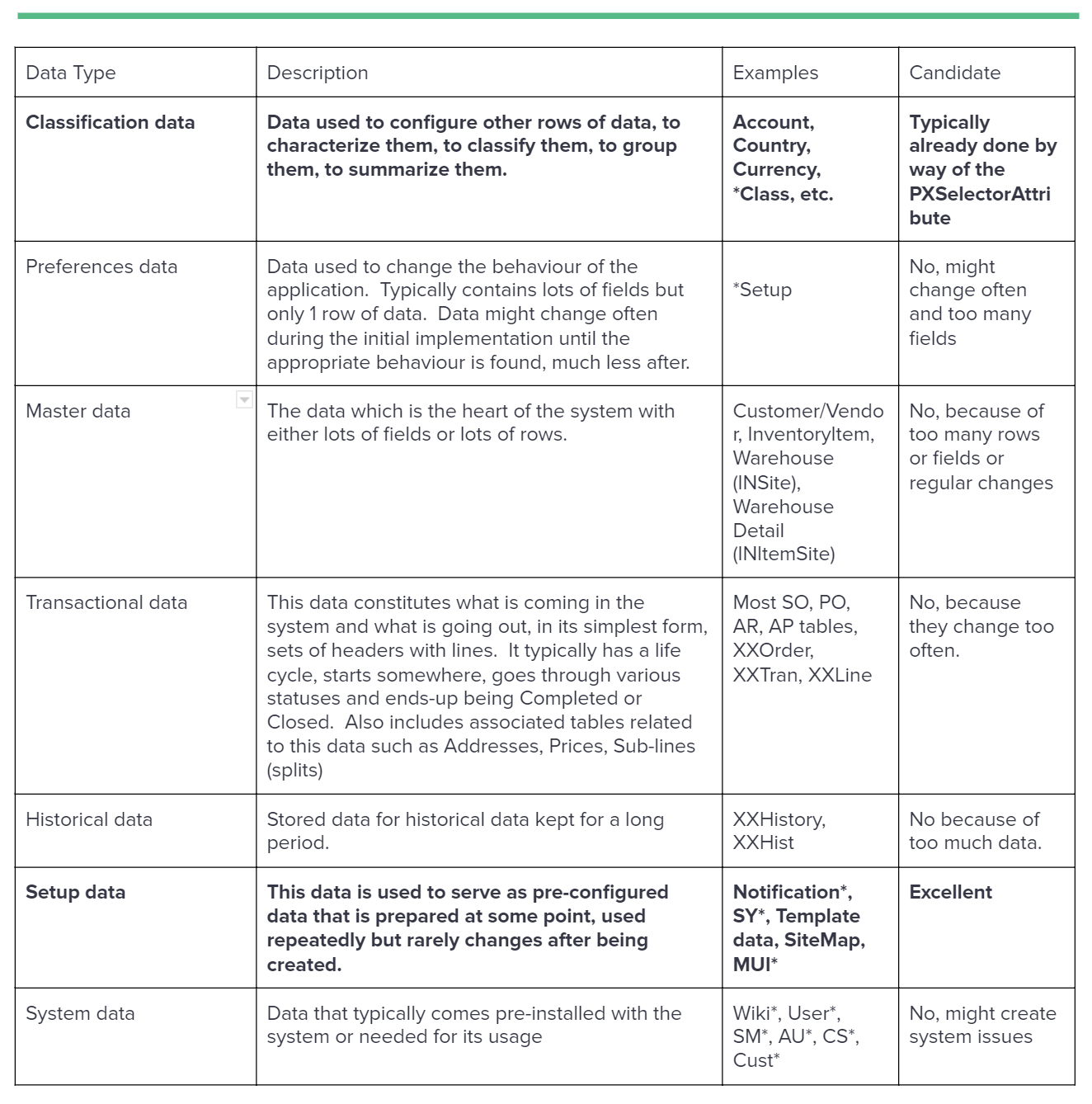

WHAT TO CACHE AND WHAT NOT TO

Before caching data, it is important to consider whether doing so is appropriate. Not all rows of data are alike, so let’s discuss which kinds of data are good candidates for caching. Here are a few situations where caching makes sense:

REASONS TO CACHE

- Data is needed often and does not change much

- A small subset of fields is needed for all the rows of a large table

- A big list of classes (types) is discovered by scanning the loaded assemblies and will not change after system start, e.g. the list of PXGraphs or a list of processors implementing a particular interface

- A graph is not easily accessible, or instantiating one just to read data is too costly

- When multiple reads would be needed to find an appropriate match (based on a multi-field mapping)

- When small data sets are needed for high-rate processing measured in milliseconds.

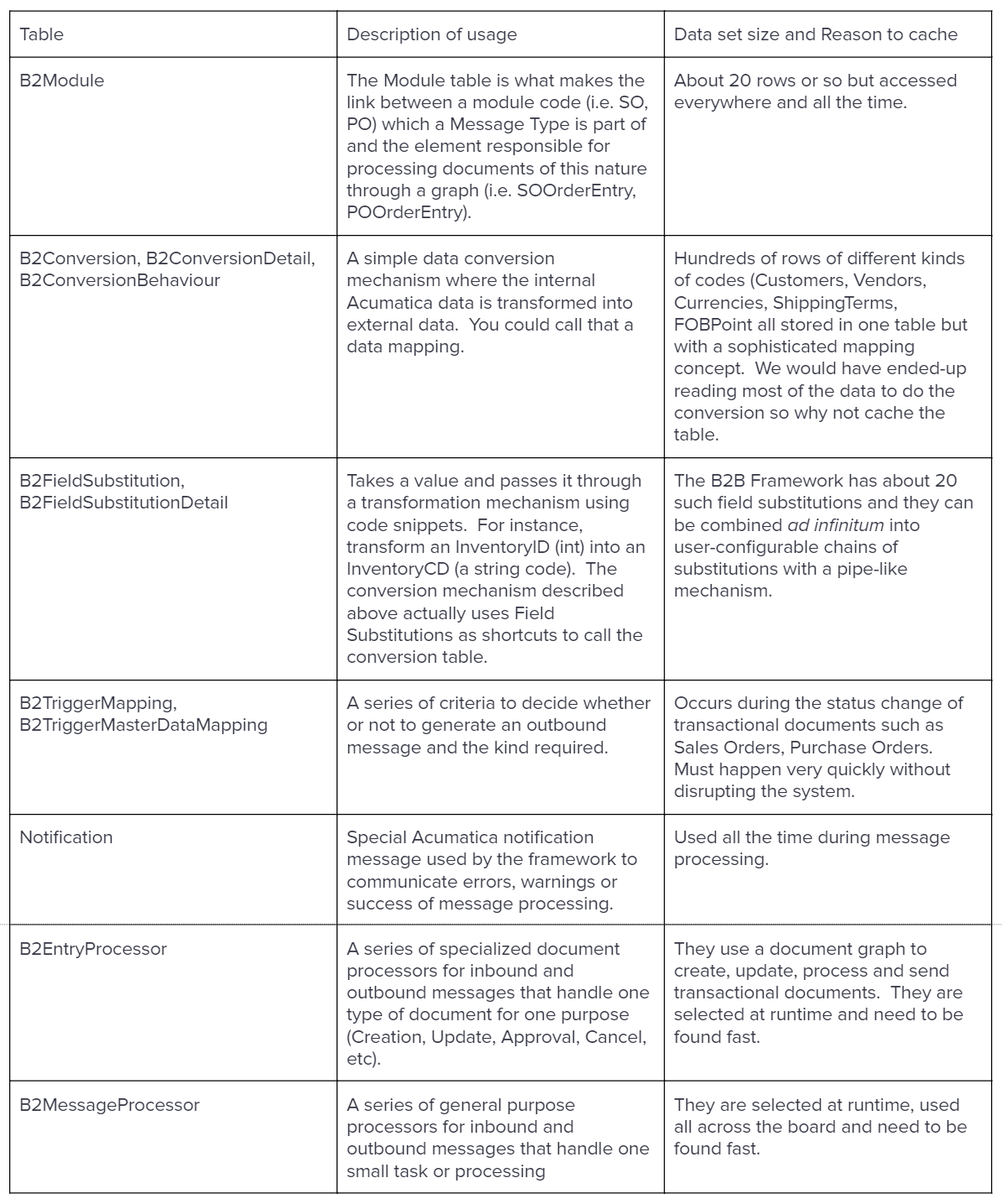

B2B/EDI FRAMEWORK

While developing a B2B/EDI framework for one of our Acumatica customers, we found many areas that would benefit from caching. The framework synchronizes data and documents from external sources to Acumatica and vice versa. It uses a lot of configuration data that, once set up, will most likely not change for a long time. Our approach was to create many small message/data processors that are called in a given sequence. Each processor specializes in doing one thing and one thing only, much like chefs in a kitchen: one is responsible for grilling, another for sauces, one for salads, one for pastries, one for cutting meat, and so on. The processors are stateless, are installed (through reflection-based discovery) when the customization is published, and are each tied to a small configuration row. For message processing, we also use numerous transformation and data conversion mechanisms, all of which are user-configurable.

Given the sheer volume of transactions and transformations, we needed a way to call those processors and conversions in rapid succession without incurring the cost of DB queries. Furthermore, for the outbound message template definitions, we needed to extract the tables and fields used by the system graphs so that we could quickly read them to generate an outbound template, which is the key driver of the outbound transactions.

THE SLOT MECHANISM

The caching mechanism in this case is the one found in the PX.Data.PXDatabase class. What makes the slot caching mechanism so special? It:

- Stores your data in thread safe dictionaries

- Stores data by your defined key so you can restrict who accesses your cached data

- Can store and retrieve data selectively using a Parameter (a class/type to represent and access a subset of the data)

- Stores data by company (each has its own cache)

- Automatically calls a Prefetch delegate on the first access to cached data

- Automatically monitors dependent tables (that you configure) and will reset the cache when someone has updated one of the dependent tables

- Automatically handles cluster mode (dependent tables are tracked across the cluster).

Let’s take a look at the various methods used in this particular case in our code:

GIST: https://gist.github.com/ste-bel/45a9e58f89054a70a76520137e825322

TABLES INVOLVED

In the next section, we will take a look at some of the tables involved in the caching of the B2B Framework.

THE CODE

A POCO to store/organize my data

First, I create a POCO to store my cached data. DACs are quite heavy in nature, and Acumatica does not recommend adding constructors to them, so I prefer to use my own lightweight POCOs. I also implement IEquatable<> and override GetHashCode() so that my searches are more efficient.

GIST: https://gist.github.com/ste-bel/cd07f0bc9810b11ead1aafa818606765
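For readers who want something inline, here is a minimal sketch of what such a POCO could look like. The class name ConversionEntry and its three fields are hypothetical and are not taken from the gist above. The assumption is that a conversion row is looked up by a case-insensitive (ConversionID, SourceValue) pair.

```csharp
using System;

// Hypothetical POCO: the name and fields are illustrative assumptions.
public sealed class ConversionEntry : IEquatable<ConversionEntry>
{
    public string ConversionID { get; }
    public string SourceValue { get; }
    public string TargetValue { get; }

    public ConversionEntry(string conversionID, string sourceValue, string targetValue)
    {
        ConversionID = conversionID ?? throw new ArgumentNullException(nameof(conversionID));
        SourceValue = sourceValue ?? throw new ArgumentNullException(nameof(sourceValue));
        TargetValue = targetValue;
    }

    // Two entries are equal when they describe the same lookup key.
    // TargetValue is the payload and is deliberately left out.
    public bool Equals(ConversionEntry other)
    {
        if (other is null) return false;
        return StringComparer.OrdinalIgnoreCase.Equals(ConversionID, other.ConversionID)
            && StringComparer.OrdinalIgnoreCase.Equals(SourceValue, other.SourceValue);
    }

    public override bool Equals(object obj) => Equals(obj as ConversionEntry);

    // Must use the same fields and the same comparer as Equals,
    // otherwise hash-based collections would miss equal entries.
    public override int GetHashCode()
    {
        unchecked
        {
            int hash = 17;
            hash = hash * 31 + StringComparer.OrdinalIgnoreCase.GetHashCode(ConversionID);
            hash = hash * 31 + StringComparer.OrdinalIgnoreCase.GetHashCode(SourceValue);
            return hash;
        }
    }
}
```

Because Equals and GetHashCode agree on the key fields, instances can be used directly as keys in a Dictionary or as members of a HashSet, which is where the search efficiency comes from.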

A helper class to store and search for data

Second, I create a helper class to simplify the code that loads and searches the data. This also lets me encapsulate and improve my search algorithm without disturbing other code. You can think of this piece of code as a data bucket. You could also use a hierarchy of helpers/buckets to bubble up searches and reduce the coupling between the calling code and the initial helper.

GIST: https://gist.github.com/ste-bel/26088249b9b584fce94af07631c7393c
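As a rough illustration of the bucket idea, the sketch below keeps all the values of one conversion behind a small search API. ConversionHelper, Add, TryConvert and ConvertOrDefault are hypothetical names chosen for this example, and the case-insensitive dictionary lookup is an assumption about how the search might be done, not a description of the gist.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper ("bucket") holding the values of a single conversion.
public sealed class ConversionHelper
{
    // The search structure is private, so it can be replaced later
    // (for example by a sorted list) without touching the callers.
    private readonly Dictionary<string, string> _targets =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    public string ConversionID { get; }

    public ConversionHelper(string conversionID)
    {
        ConversionID = conversionID ?? throw new ArgumentNullException(nameof(conversionID));
    }

    public int Count => _targets.Count;

    // Called by the loader while the cache is being populated.
    public void Add(string sourceValue, string targetValue)
    {
        if (sourceValue == null) throw new ArgumentNullException(nameof(sourceValue));
        _targets[sourceValue] = targetValue; // last row wins on duplicate keys
    }

    // Returns false (and a null target) when no mapping exists.
    public bool TryConvert(string sourceValue, out string targetValue)
    {
        if (sourceValue == null)
        {
            targetValue = null;
            return false;
        }
        return _targets.TryGetValue(sourceValue, out targetValue);
    }

    // Convenience wrapper for callers that prefer a fallback over a bool.
    public string ConvertOrDefault(string sourceValue, string fallback)
    {
        return TryConvert(sourceValue, out string target) ? target : fallback;
    }
}
```

Callers only see TryConvert and ConvertOrDefault, so the search algorithm can be improved inside the helper without any change to the code that uses it.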

The slot data loader

Last, I create a class implementing IPrefetchable that reads the data using the PXDatabase methods, without the need for a graph. I also use a dictionary to store my helpers/buckets, subdividing the data by some key such as a ConversionID or a ClassID.

In my Prefetch method, I clear my helper dictionary, then read the data and populate the dictionary with all the helpers.

Afterwards, I provide some static methods to retrieve helpers by their ID and I use the helper to search for data.

GIST: https://gist.github.com/ste-bel/c3d8a380c88d5ff71fbc6102d4d9539a
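The shape of such a loader can be sketched without any Acumatica assemblies. In the sketch below, everything is a stand-in: the local IPrefetchable interface only mirrors the name used above, RowSource replaces the PXDatabase read, and the static field with Invalidate() imitates what the slot mechanism would do when a dependent table changes. Plain inner dictionaries play the role of the helpers/buckets. None of this is the actual PX.Data API.

```csharp
using System;
using System.Collections.Generic;

// Local stand-in so the sketch compiles on its own; not the PX.Data interface.
public interface IPrefetchable
{
    void Prefetch();
}

public sealed class ConversionSlot : IPrefetchable
{
    // Hypothetical row source standing in for the database read.
    // Each row is { ConversionID, SourceValue, TargetValue }.
    public static Func<IEnumerable<string[]>> RowSource = () => new string[0][];

    private static readonly object Sync = new object();
    private static ConversionSlot _instance;

    // One bucket per ConversionID.
    private readonly Dictionary<string, Dictionary<string, string>> _buckets =
        new Dictionary<string, Dictionary<string, string>>(StringComparer.OrdinalIgnoreCase);

    // Clear, read every row once, and regroup the rows into buckets.
    public void Prefetch()
    {
        _buckets.Clear();
        foreach (string[] row in RowSource())
        {
            if (!_buckets.TryGetValue(row[0], out Dictionary<string, string> bucket))
            {
                bucket = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
                _buckets[row[0]] = bucket;
            }
            bucket[row[1]] = row[2];
        }
    }

    // Stand-in for the slot lookup: load on first access, then reuse.
    private static ConversionSlot GetSlot()
    {
        lock (Sync)
        {
            if (_instance == null)
            {
                var slot = new ConversionSlot();
                slot.Prefetch();
                _instance = slot;
            }
            return _instance;
        }
    }

    // Stand-in for the reset that follows a change to a dependent table.
    public static void Invalidate()
    {
        lock (Sync) { _instance = null; }
    }

    // Static accessor used by callers; returns null for an unknown ID.
    public static IReadOnlyDictionary<string, string> GetBucket(string conversionID)
    {
        return GetSlot()._buckets.TryGetValue(conversionID, out Dictionary<string, string> bucket)
            ? bucket
            : null;
    }
}
```

The point of the design is that callers never trigger a read themselves: the first GetBucket call pays for one full load, every later call is a dictionary lookup, and a reset simply causes the next access to reload.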

The data loader usage

To use the data loader, you simply talk to your loader to get the helper, then you use the helper to convert the data.

GIST: https://gist.github.com/ste-bel/96f6b77a93c14ed8cd70db21b4bfab92

CONCLUSION

At first, it may seem like a lot of work to cache and retrieve data. However, once you get used to it, you can write this type of code in half an hour, and the speed improvement is considerable. By using the Yaql classes (Yet another Query Language), you can also read more than one table and use complicated conditions.

See this GIST for more details: https://gist.github.com/ste-bel/a27c51dd4234d88c7d098b161b3d9386

I wish you well in your caching endeavors and happy coding!
